How Two Tech Giants Are Shaping the Future of AI and Accessibility

In the high-stakes race for dominance in AI and high-performance computing (HPC), AMD and NVIDIA are set to clash with their latest innovations: the AMD MI350 and NVIDIA Blackwell chips. This showdown isn’t just about raw power—it’s about redefining accessibility, efficiency, and the very fabric of technological progress. Let’s dive into how these next-gen chips stack up, why they matter for industries like healthcare and low-vision assistive technologies, and what their rivalry means for the future of computing.

The Contenders: AMD MI350 and NVIDIA Blackwell

AMD MI350: Touted as a “game-changer” by CEO Dr. Lisa Su, the MI350 is AMD’s answer to the growing demand for AI-optimized hardware. Slated for a 2025 release, this chip promises advanced parallel processing, improved energy efficiency, and a focus on democratizing AI infrastructure. With its 3nm process and enhanced memory bandwidth, the MI350 targets data centers, cloud computing, and even edge devices, making it a versatile contender [1][10].

NVIDIA Blackwell: NVIDIA’s Blackwell architecture, whose consumer GPUs are expected to debut at CES 2025 with the GeForce RTX 50 series, aims to cement NVIDIA’s lead in AI acceleration. Blackwell addresses past criticisms of VRAM limitations and power consumption, offering up to 16GB of base memory and refined thermal management. Designed for both gaming and enterprise AI workloads, Blackwell is NVIDIA’s bid to maintain its 90% market share in AI hardware [2][6].

Why This Battle Matters

The competition between AMD and NVIDIA isn’t just a tech enthusiast’s playground; it’s a catalyst for innovation. For low-vision users, advancements in AI-driven visual processing could revolutionize assistive technologies. Imagine real-time image recognition chips powering smart glasses that describe surroundings, or apps that convert text to speech seamlessly. Both AMD and NVIDIA are indirectly fueling these possibilities through their focus on AI efficiency [3][4].

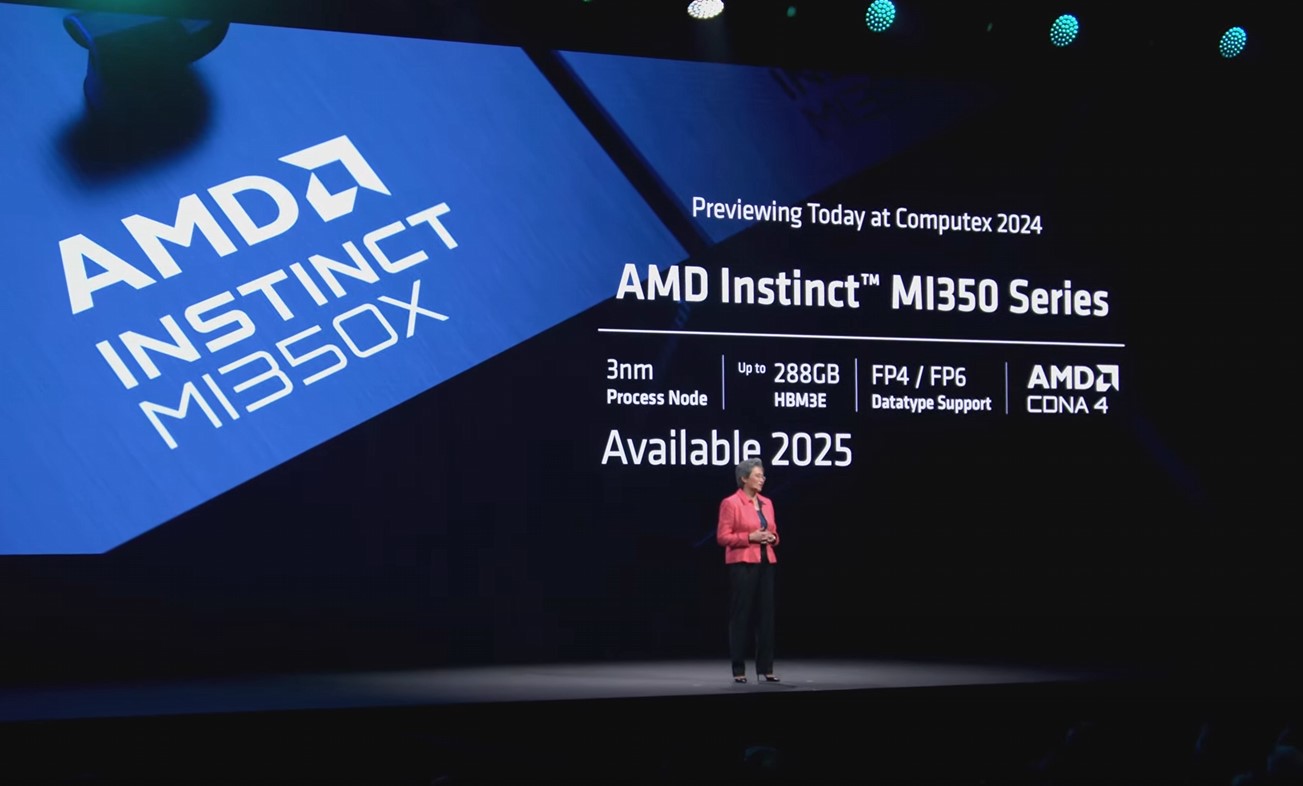

AMD Computex 2024 Keynote AMD Instinct MI350X

For businesses, the stakes are equally high. AMD’s MI350, with its lower cost-per-performance ratio, could disrupt NVIDIA’s dominance in data centers. Meanwhile, NVIDIA’s Blackwell ecosystem (CUDA, NVLink, and proprietary software) remains a formidable moat. As Dr. Su noted, “AI investment cycles will remain strong,” and both companies are vying to capture this trillion-dollar market [7][12].

As 2025 approaches, the AMD MI350 and NVIDIA Blackwell B200 are set to dominate the GPU landscape, driving advancements in AI, HPC, and even assistive technologies for low vision users. These chips represent the pinnacle of data center performance, and their rivalry will shape the tech industry’s trajectory. This report compares the MI350 with NVIDIA’s most competitive offering in 2025—the B200—focusing on their specifications, performance, and potential applications.

AMD MI350: Background and Specifications

The AMD MI350, part of the Instinct series, is expected to launch in 2025, leveraging the CDNA4 architecture and a cutting-edge 3nm process. According to “AMD Instinct MI350 Series: Next-Generation Data Center GPUs,” its key specifications include:

- Memory Capacity: 288GB HBM3E

- Memory Bandwidth: Approximately 22.1 TB/s

- FP16 Performance: Estimated at 3,000-4,000 TFLOPS

- AI Inference Performance: Claimed to be 35 times higher than the MI300X

The MI350 is designed for memory-intensive tasks, such as training large language models and running complex scientific simulations, positioning it as a leader in data-heavy workloads.

NVIDIA Blackwell Series and the B200

NVIDIA’s Blackwell series, unveiled at the 2024 GTC conference, succeeds the Hopper architecture, with the B200 as its flagship GPU. According to the NVIDIA Newsroom announcement “NVIDIA Blackwell Platform Arrives to Power a New Era of Computing,” the B200’s specs include:

- Architecture: Blackwell

- Process Node: 4nm

- Memory: 192GB HBM3E

- Memory Bandwidth: 8 TB/s

- FP16 Performance: Around 5,000 TFLOPS with sparse computing, approximately 2,500 TFLOPS in dense computing (based on Tom’s Hardware, “Nvidia’s next-gen AI GPU revealed”)

- AI Inference Performance: Up to 30 times faster than the H100, with 25x better energy efficiency

Scheduled for an early 2025 release, the B200 targets the same markets as the MI350, excelling in AI inference and HPC applications.
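The sparse and dense FP16 figures above differ by a factor of two because NVIDIA quotes its sparse throughput for 2:4 structured sparsity: in every group of four weights at most two are nonzero, so the hardware can skip half of the multiplications. Below is a simplified plain-Python illustration of that pattern. The helper names are hypothetical and this is not NVIDIA’s own pruning tooling; it keeps the two largest-magnitude weights in each group of four and counts the multiplications a sparsity-aware dot product would still perform.

```python
def prune_2_4(weights):
    """Zero out all but the two largest-magnitude values in each group of four.

    Assumes len(weights) is a multiple of 4 (real tools pad or reshape first).
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group of four.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(group[i] if i in keep else 0.0 for i in range(4))
    return pruned


def multiplies_needed(weights):
    """Count the multiplications a sparsity-aware dot product must perform."""
    return sum(1 for w in weights if w != 0.0)


dense = [0.9, -0.1, 0.4, 0.05, -0.7, 0.2, 0.3, -0.8]
sparse = prune_2_4(dense)
print(sparse)                     # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.0, -0.8]
print(multiplies_needed(dense))   # 8
print(multiplies_needed(sparse))  # 4
```

Halving the multiplications is where the 2x peak comes from, but only models pruned (and typically fine-tuned) to this pattern benefit; unpruned models run at the dense rate.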

Performance Comparison and Analysis

Here’s a detailed comparison of the two chips:

| Feature | AMD MI350 (Estimated) | NVIDIA Blackwell B200 |
|---|---|---|
| Architecture | CDNA4 | Blackwell |
| Process Node | 3nm | 4nm |
| Memory Capacity | 288GB HBM3E | 192GB HBM3E |
| Memory Bandwidth | ~22.1 TB/s | 8 TB/s |
| FP16 Performance (Dense) | 3,000-4,000 TFLOPS | ~2,500 TFLOPS |
| FP16 Performance (Sparse) | Unknown | ~5,000 TFLOPS |
| AI Inference Improvement | 35x over MI300X | 30x over H100 |
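One way to read the table is through the roofline model, a standard back-of-the-envelope performance model: dividing peak compute by memory bandwidth gives the ridge point, the number of floating-point operations a kernel must perform per byte it moves before compute, rather than memory, becomes the limit. The sketch below plugs in the dense FP16 and bandwidth figures from the table. The MI350 values are estimates, so the results are only as good as those inputs, and the function names are illustrative.

```python
# Figures as quoted in the comparison table (dense FP16; MI350 values are estimates).
CHIPS = {
    "AMD MI350 (low estimate)": {"fp16_tflops": 3000, "bandwidth_tbps": 22.1},
    "AMD MI350 (high estimate)": {"fp16_tflops": 4000, "bandwidth_tbps": 22.1},
    "NVIDIA B200": {"fp16_tflops": 2500, "bandwidth_tbps": 8.0},
}


def ridge_point(fp16_tflops, bandwidth_tbps):
    """FLOPs per byte at which a kernel stops being memory-bound.

    TFLOPS / (TB/s) = (1e12 FLOP/s) / (1e12 B/s), so the units reduce to FLOP/byte.
    """
    return fp16_tflops / bandwidth_tbps


def attainable_tflops(fp16_tflops, bandwidth_tbps, flops_per_byte):
    """Roofline bound: performance is capped by peak compute or by memory traffic."""
    return min(fp16_tflops, bandwidth_tbps * flops_per_byte)


for name, spec in CHIPS.items():
    print(f"{name}: ridge point ~{ridge_point(**spec):.1f} FLOP/byte")
```

With these inputs the MI350’s ridge point is roughly 136 to 181 FLOP/byte versus 312.5 for the B200, so kernels with low arithmetic intensity would reach a larger fraction of peak on the MI350. For a hypothetical kernel at 50 FLOP/byte, `attainable_tflops` gives 400 TFLOPS for the B200 and about 1,105 TFLOPS for the MI350 at its low estimate. These are spec-sheet bounds, not benchmark results.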

The MI350 clearly outpaces the B200 in memory capacity and bandwidth, making it a powerhouse for tasks requiring extensive data handling, such as large-scale AI model training. However, the B200’s FP16 performance, peaking at 5,000 TFLOPS with sparsity optimizations, suggests it could excel in compute-intensive AI inference workloads. That sparse figure assumes models pruned to NVIDIA’s 2:4 structured-sparsity pattern, so it is not directly comparable with the MI350’s dense estimate. On dense FP16, the B200’s approximately 2,500 TFLOPS sits below the MI350’s estimated 3,000-4,000 TFLOPS, but NVIDIA’s expertise in sparse computing could tilt the scales in specific scenarios.
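The capacity gap can be made concrete with a rough calculation: model weights take about parameter count × bytes per parameter (2 bytes for FP16, 1 byte for FP8). This sketch deliberately ignores activations, the KV cache, and, for training, gradients and optimizer states, which multiply memory needs several times over. It therefore gives an upper bound on model size per GPU rather than a sizing guide; the names are illustrative.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

# Per-GPU memory capacities as quoted in the comparison table.
HBM_GB = {"AMD MI350": 288, "NVIDIA B200": 192}


def max_params_billions(memory_gb, dtype):
    """Largest parameter count (in billions) whose weights alone fit in memory_gb.

    Treats 1 GB as 1e9 bytes for simplicity, so GB / bytes-per-param = billions of params.
    """
    return memory_gb / BYTES_PER_PARAM[dtype]


for chip, gb in HBM_GB.items():
    for dtype in BYTES_PER_PARAM:
        params = max_params_billions(gb, dtype)
        print(f"{chip}, {dtype}: up to ~{params:.0f}B parameters (weights only)")
```

The results (144B versus 96B parameters at FP16, 288B versus 192B at FP8) simply mirror the 1.5x ratio between 288GB and 192GB; larger models are split across multiple GPUs.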

Additionally, the B200’s 25x energy efficiency improvement over the H100 enhances its appeal for cost-conscious data centers, whereas MI350 energy efficiency data remains undisclosed. The true winner will emerge from real-world benchmarks in 2025, reflecting their performance across diverse applications.
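The inference claims in the table (35x over MI300X, 30x over H100) cannot be compared directly because they use different baselines. Putting both on a common footing would require the MI300X-to-H100 performance ratio for the same workload, which this article does not provide, so the sketch below treats that ratio as a hypothetical input.

```python
def speedup_vs_h100(claimed_speedup, baseline_vs_h100):
    """Re-express a vendor speedup claim relative to the NVIDIA H100.

    claimed_speedup: the vendor's figure against its own baseline
        (35 for MI350 vs MI300X, as quoted in the table).
    baseline_vs_h100: HYPOTHETICAL ratio of that baseline's performance to the
        H100 on the same workload (1.0 means parity). Not a published figure.
    """
    return claimed_speedup * baseline_vs_h100


MI350_CLAIM = 35  # vs MI300X, as quoted
B200_CLAIM = 30   # vs H100, as quoted

# Hypothetical MI300X-to-H100 ratios, chosen only to show the sensitivity.
for ratio in (0.8, 1.0, 1.2):
    mi350 = speedup_vs_h100(MI350_CLAIM, ratio)
    print(f"MI300X = {ratio:.1f}x H100 -> MI350 ~{mi350:.0f}x H100, B200 {B200_CLAIM}x H100")
```

Only at parity would 35x and 30x line up directly; at a hypothetical 0.8 ratio the MI350’s claim works out to 28x H100, below the B200’s 30x. Vendor claims also differ in model, precision, and system configuration, which is why the real-world benchmarks mentioned above matter more than headline multipliers.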

Potential in Low Vision Assistive Technologies

Both the MI350 and B200 have significant potential in low-vision assistive technologies. Their computational capabilities can power real-time image processing, such as boosting contrast and brightness for greater visual clarity. The B200’s strong AI inference performance could drive AI-powered visual aids, such as audio descriptions and object recognition, while the MI350’s high memory capacity supports processing complex visual datasets, advancing the development of low-vision assistive technology.
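Contrast and brightness enhancement is, at its simplest, a per-pixel linear transform: output = alpha × input + beta, clipped to the valid range, where alpha > 1 stretches the differences between pixel values (more contrast) and beta > 0 brightens. The minimal sketch below works on an 8-bit grayscale image stored as nested lists; the function name and default parameter values are illustrative assumptions, not taken from any particular assistive product. A GPU applies the same arithmetic to millions of pixels in parallel, which is why this kind of work maps well onto accelerators.

```python
def adjust_contrast_brightness(image, alpha=1.5, beta=20):
    """Apply out = alpha * in + beta to every pixel of an 8-bit grayscale image.

    image: list of rows, each a list of ints in 0..255.
    alpha > 1 scales up differences between pixels; beta > 0 brightens.
    Results are rounded and clipped back into 0..255.
    """
    return [
        [min(255, max(0, round(alpha * px + beta))) for px in row]
        for row in image
    ]


# A dim, low-contrast patch: faint strokes (60, 90) on a dark background (40).
dim_text = [
    [40, 40, 60],
    [40, 90, 60],
]
print(adjust_contrast_brightness(dim_text))  # [[80, 80, 110], [80, 155, 110]]
```

The gap between background and stroke grows from 50 to 75 levels in this example, which is the kind of separation a low-vision user benefits from; very bright inputs saturate at 255, so real pipelines tune alpha and beta per scene.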

Conclusion and Outlook

In 2025, NVIDIA’s Blackwell B200 stands as the most direct competitor to the AMD MI350, with both chips vying for dominance in AI and HPC markets. The B200 offers advantages in compute performance and energy efficiency, while the MI350 excels in memory capacity and bandwidth. Their ultimate performance will depend on specific use cases and benchmark results, particularly for AI-driven visual aids and visual-clarity enhancements in low-vision applications. As these chips roll out, they promise to redefine data center capabilities and accessibility solutions alike.

The Accessibility Angle: Bridging the Gap for Low-Vision Users

While neither chip is marketed explicitly for accessibility, their architectures unlock potential for low-vision assistive technologies. AMD’s MI350, with its high-throughput memory and optimized AI pipelines, could power devices that process visual data faster: think apps that magnify text or detect obstacles in real time. NVIDIA’s Blackwell architecture, whose consumer GPUs include dedicated ray-tracing hardware, might enhance tactile feedback systems or immersive audio navigation tools.
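Digital magnification, the core feature of text-magnifier apps, can in its simplest form be sketched as nearest-neighbor upscaling: each source pixel is repeated a fixed number of times horizontally and vertically. Production magnifiers may use smoother interpolation and edge enhancement; this plain-Python sketch, with a hypothetical `magnify` helper, only shows the basic idea.

```python
def magnify(image, factor):
    """Nearest-neighbor magnification of a 2-D pixel grid by an integer factor."""
    if not isinstance(factor, int) or factor < 1:
        raise ValueError("factor must be a positive integer")
    magnified = []
    for row in image:
        # Repeat each pixel `factor` times horizontally...
        wide_row = [px for px in row for _ in range(factor)]
        # ...then repeat the widened row vertically, copying so rows stay independent.
        magnified.extend(list(wide_row) for _ in range(factor))
    return magnified


# A tiny 2x2 "glyph": black (0) and white (255) pixels on a diagonal.
glyph = [
    [0, 255],
    [255, 0],
]
print(magnify(glyph, 2))
# [[0, 0, 255, 255], [0, 0, 255, 255], [255, 255, 0, 0], [255, 255, 0, 0]]
```

Nearest-neighbor keeps edges hard and costs almost nothing to compute, which suits live camera feeds; the trade-off is blocky glyphs at high zoom levels.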

Critically, both companies are investing in software ecosystems. AMD’s ROCm platform and NVIDIA’s CUDA libraries are essential for developers creating adaptive technologies. As one analyst put it, “The real winner here is the end user, whether they’re a gamer, a researcher, or someone relying on AI to navigate the world.”

Market Impact and Future Trends

AMD’s aggressive pricing and open-source software approach could attract cost-sensitive enterprises, especially in healthcare and education. For instance, hospitals using MI350-powered systems might deploy AI diagnostic tools more affordably. Meanwhile, NVIDIA’s Blackwell is likely to dominate premium sectors like autonomous vehicles and metaverse development.

Looking ahead, 2025 will be pivotal. AMD’s MI400, slated for 2026, and NVIDIA’s rumored “Blackwell+” refresh suggest this rivalry is far from over. As demand for AI grows, so does the need for chips that balance power, accessibility, and sustainability, a challenge both companies are racing to meet. For further reading, see Zoomax’s blog.

FAQs:

1. What are the key differences between the AMD MI350 and NVIDIA Blackwell chips?

- Answer: The AMD MI350 is built using a 3nm process, features 288GB of HBM3E memory, and offers an impressive memory bandwidth of around 22.1 TB/s. It is optimized for memory-intensive tasks and large-scale AI training. In contrast, NVIDIA’s Blackwell B200, produced on a 4nm process, provides 192GB of HBM3E memory with 8 TB/s bandwidth. Its FP16 performance, especially under sparse computing, can reach approximately 5,000 TFLOPS, making it highly effective for AI inference and compute-heavy tasks.

2. How will these chips impact the AI and high-performance computing (HPC) industries?

- Answer: Both chips are poised to drive significant advancements in AI and HPC. The MI350’s high memory capacity and bandwidth make it ideal for training large AI models and running complex simulations. Meanwhile, the Blackwell B200’s strength in AI inference and energy efficiency could lead to lower operational costs for data centers. Their competition is expected to foster innovation in the trillion-dollar AI market and further boost high-performance computing capabilities.

3. What potential benefits do these chips offer for low-vision assistive technologies?

- Answer: The advanced AI capabilities of these chips can power real-time image processing, improved contrast, and enhanced object recognition. Such functionalities are crucial for developing assistive devices like smart glasses, text magnification apps, and audio description tools that help low-vision users navigate their environments more effectively.

4. How might these new products affect the market, and what are the respective advantages of AMD and NVIDIA?

- Answer: AMD’s MI350, with its competitive cost-performance ratio and superior memory specs, may disrupt NVIDIA’s stronghold in data centers, especially for cost-sensitive applications in healthcare and education. On the other hand, NVIDIA’s robust software ecosystem (including CUDA and NVLink) and notable energy efficiency make the Blackwell B200 a strong contender in premium sectors such as autonomous vehicles and metaverse development. This rivalry is set to reshape market dynamics as both companies vie for dominance.

5. What are the future trends for AI chip development?

- Answer: As the AI landscape evolves, future chips will need to balance raw computational power with energy efficiency and accessibility. Beyond the 2025 releases of the MI350 and Blackwell B200, expectations include AMD’s upcoming MI400 in 2026 and NVIDIA’s potential “Blackwell+” series. These advancements will likely further integrate AI, HPC, and assistive technologies, driving innovation across multiple industries.

References

[1] AMD CEO Confirms MI350’s 2025 Launch to Compete with NVIDIA Blackwell. (2024, July 31). MyDrivers. https://news.mydrivers.com/1/994/994612.htm

[2] NVIDIA Blackwell: A Deep Dive into the Next-Gen GPU. (2024, November 6). Sina Finance. https://t.cj.sina.com.cn/articles/view/5953190035/162d678930190136tu

[3] AMD MI350 vs. Blackwell: Reshaping High-Performance Computing. (2024, July 31). Sohu. https://www.sohu.com/a/797378236_121924584

[6] The AI Data Center Boom: AMD’s Path to Compete with NVIDIA. (2025, January 6). Sohu. https://www.sohu.com/a/846001582_121902920

[10] AMD’s MI350 Chip: A Catalyst for Server Market Growth. (2025, February 5). Sohu. https://www.sohu.com/a/856501657_121924584